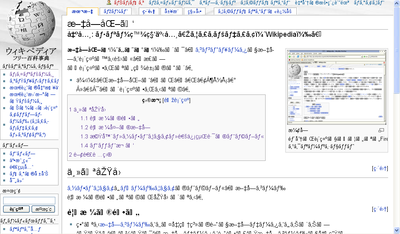

One of my favorite Japanese words is mojibake (文字化け), which literally translates as “character changing.” The term describes an error experienced frequently by computer users who read and write non-Latin scripts, like Japanese. When readers of non-Latin scripts open a document, email, web page, or some other text, the text is sometimes displayed mangled and unreadable. Japanese speakers refer to the resulting garbage as “mojibake.” Here’s a great example from the mojibake article in Wikipedia (the image is supposed to be in Japanese and to display the Mojibake article itself).

The problem has been so widespread in Japanese that webpages would often place small images in the top corners of pages that say “mojibake.” If a user cannot read the content on the page, the image links to pages which will try to fix the problem for the user.

From a more technical perspective, mojibake might be better described as “incorrect character decoding,” and it hints at a largely hidden part of the way our computers handle text that we usually take for granted.

Of course, computers don’t understand Latin or Japanese characters. Instead, they operate on bits and bytes: ones and zeros that represent numbers. In order to input or output text, computer scientists created mappings of letters and characters to numbers represented by bits and bytes. These mappings end up forming a sequence of characters or letters in a particular order, often called a character set. To display two letters, a computer might ask for the fifth and tenth characters from a particular set. These character sets are codes; they map numbers (i.e., positions in the list) to letters just as Morse code maps dots and dashes to letters. Letters can be converted to numbers by a computer for storage and then converted back to be redisplayed. The process is called character encoding and decoding, and it happens every time a computer inputs or outputs text.
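To make the idea concrete, here is a minimal Python sketch of a toy character set. The `CHARSET` string and the `encode` and `decode` helpers are invented for illustration and do not correspond to any real encoding; they only show that a character set is an ordered list and that encoding and decoding are lookups by position.

```python
# A toy character set: a character's number is simply its position in this string.
# This is a made-up set for illustration, not a real-world encoding.
CHARSET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ "


def encode(text):
    """Convert each character to its (zero-based) position in CHARSET."""
    return [CHARSET.index(ch) for ch in text]


def decode(numbers):
    """Convert positions in CHARSET back into characters."""
    return "".join(CHARSET[n] for n in numbers)


numbers = encode("HELLO")
print(numbers)          # [7, 4, 11, 11, 14]
print(decode(numbers))  # HELLO

# The fifth and tenth characters of the set (positions 4 and 9 when counting from zero).
print(decode([4, 9]))   # EJ
```

Anything outside the list simply cannot be encoded: `encode("hello")` raises a `ValueError` here because the toy set has no lowercase letters.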

While there may be some natural orderings (e.g., A through Z), there are many ways to encode or map a set of letters and numbers (e.g., should one put numbers before letters in the set? Should capital and lowercase letters be interspersed?). The most important computer character encoding is ASCII, which was first defined in 1963 and is the de facto standard for almost all modern computers. It defines 128 characters, including the letters and numbers used in English. But ASCII says nothing about how one should encode accented Latin characters, scientific symbols, or the characters in any other scripts; they are simply not in the list of letters and numbers ASCII provides and no mapping is available. Users of ASCII can only use the characters in the set.
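A short sketch of that limitation using Python's built-in `ascii` codec. The `try_ascii` helper is a hypothetical name introduced here for illustration; the underlying `str.encode` call and `UnicodeEncodeError` are standard Python.

```python
def try_ascii(text):
    """Return the ASCII bytes for text, or None if some character is not in ASCII."""
    try:
        return text.encode("ascii")
    except UnicodeEncodeError:
        return None


# Characters in the ASCII set map to numbers between 0 and 127.
print(list("Az09".encode("ascii")))  # [65, 122, 48, 57]

# Anything outside the 128-character list has no mapping at all.
print(try_ascii("cafe"))      # b'cafe'
print(try_ascii("café"))      # None
print(try_ascii("文字化け"))  # None
```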

Left with computers unable to represent their languages, many non-English speakers have added to and improved on ASCII to create new encodings: different mappings of bits and bytes to different sets of letters. Japanese text can frequently be found in encodings with obscure technical names like EUC-JP, ISO-2022-JP, Shift_JIS, and UTF-8. It’s not important to understand how they differ, although I’ll come back to this in a future blog post. It’s merely important to realize that each of these represents a different way to map a set of bits and bytes into letters, numbers, and punctuation.
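As a rough illustration, the sketch below encodes the same four Japanese characters with Python's codecs for these four encodings (`euc_jp`, `iso2022_jp`, `shift_jis`, and `utf-8` are Python's names for them). The variable names are arbitrary; the point is only that one piece of text becomes a different sequence of bytes under each mapping.

```python
text = "文字化け"

# Encode the same text under four different character encodings.
encoded = {
    name: text.encode(name)
    for name in ("euc_jp", "iso2022_jp", "shift_jis", "utf-8")
}

for name, data in encoded.items():
    # Show the length in bytes and the raw bytes in hexadecimal.
    print(f"{name:<12} {len(data):>2} bytes  {data.hex()}")

# Each byte sequence decodes back to the original text,
# but only when the matching encoding is used.
for name, data in encoded.items():
    assert data.decode(name) == text
```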

For example, the set of bytes that says “文字化け” (the word for “mojibake” in Japanese) encoded in UTF-8 would show up as “��絖�����” in EUC-JP, “������������” in ISO-2022-JP, and “æ–‡å—化㑔 in ISO-8859-1. Each of the strings above is a valid decoding of identical data: the same ones and zeros. But of course, only the first is correct and comprehensible by a human. Although the others are displaying the same data, the data is unreadable by humans because it is decoded according to a different character set’s mapping! This is mojibake.
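The effect is easy to reproduce. The following sketch takes the UTF-8 bytes for “文字化け” and decodes them with several of Python's codecs. The `decode_as` helper is a hypothetical name used for illustration, and `errors="replace"` is my assumption about how a forgiving viewer behaves: bytes with no meaning in the chosen encoding are shown as the replacement character instead of raising an error. The exact garbage displayed may differ from the strings quoted above depending on the decoder and font.

```python
def decode_as(data, encoding):
    """Decode bytes with the given encoding, substituting U+FFFD for invalid bytes."""
    return data.decode(encoding, errors="replace")


# The same twelve bytes every time: "文字化け" encoded as UTF-8.
data = "文字化け".encode("utf-8")

for encoding in ("utf-8", "euc_jp", "iso2022_jp", "latin-1"):
    # Only the first line is readable; the rest are mojibake.
    print(f"{encoding:<12} {decode_as(data, encoding)}")

# Nothing was lost or changed: the Latin-1 mojibake still holds the original bytes.
assert decode_as(data, "latin-1").encode("latin-1") == data
```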

For every scrap of text that a computer shows to or takes from a human, the computer needs to keep track of the encoding the data is in. Every web browser must know the encoding of the page it is receiving and the encoding in which it will be displayed to the user. If the data sent is in a different encoding than the one that will be displayed, the computer must convert the text from one encoding to another. Although we don’t notice it, encoding metadata is passed along with almost every webpage we read and every email we send. Data is being converted between encodings millions of times each day. We don’t even notice that text is encoded until it doesn’t decode properly.
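Here is a minimal sketch of that bookkeeping, assuming the encoding is announced in a `Content-Type` header such as `text/html; charset=Shift_JIS`. The function names and the sample header are hypothetical, and falling back to UTF-8 when no charset is declared is my own simplification; real browsers and mail clients use more elaborate rules.

```python
from email.message import Message


def charset_from_content_type(header_value, default="utf-8"):
    """Extract the declared charset from a Content-Type header value."""
    msg = Message()
    msg["Content-Type"] = header_value
    # get_content_charset() returns the charset in lowercase, or None if absent.
    return msg.get_content_charset() or default


def transcode(data, source_encoding, target_encoding):
    """Convert bytes from one encoding to another by way of decoded text."""
    return data.decode(source_encoding).encode(target_encoding)


# Pretend this body and header arrived from a server.
body = "文字化け".encode("shift_jis")
declared = charset_from_content_type("text/html; charset=Shift_JIS")

utf8_body = transcode(body, declared, "utf-8")
print(declared, body.hex(), "->", "utf-8", utf8_body.hex())
```

If the declared charset is wrong or missing and the fallback guess does not match, the `decode` step either fails or produces mojibake, which is exactly the failure described above.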

Mojibake makes this usually invisible process extremely visible and provides an opportunity to understand that our text is coded, and how. Encoding introduces important limitations: it limits our expression to the things that are listed in pre-defined character sets. Until the creation of an encoding called Unicode, one couldn’t mix Japanese and Thai in the same document; while there were encodings for both, there were no character sets that encoded the letters for both. Apparently, in Chinese, there are older, more obscure characters that no computers can encode yet. Computer users simply can’t write these characters on computers. I’ve seen computer users in Ethiopia emailing each other in English because support for Amharic encodings at the time was so poor and uneven! All of these limits, and many more, are part and parcel of our character encoding systems. They become visible only when the usually invisible process of character encoding is thrust into view. Mojibake provides one such opportunity.