Revealing errors rest on a simple observation: a technology’s failure to deliver a message can tell us a lot. In this sense, there’s an intriguing analogy to be drawn between revealing errors and censorship.

Censorship doesn’t usually keep people from saying or writing something; it keeps them from communicating it. When censorship is effective, an audience doesn’t realize that any speech ever occurred or that any censorship has happened. They simply don’t know something and, perhaps more importantly, don’t know that they don’t know. As with invisible technologies, a censored community might never realize that its information about, and interaction with, the world are being shaped by someone else’s design.

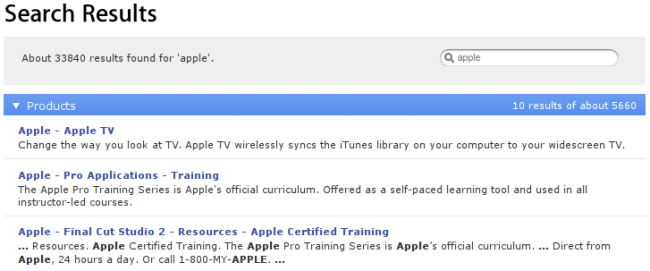

I was once in a cafe with a large SMS/text message “board.” Patrons could send an SMS to a particular number and it would be displayed on a flat-panel television, mounted on the wall, that everyone in the cafe could read. I tested to see if there was a content filter and, sure enough, any message that contained a four-letter word was silently dropped; it simply never showed up on the screen. As the censored party, I could see that my message never appeared, and that failure revealed a censor. Further testing, and my success in posting messages with creatively spelled profanity, with numbers in place of letters, and as crude ASCII drawings, revealed the censor to be a piece of software with a blacklist of terms; no human charged with blocking profanity would have let “sh1t” through. Throughout the whole process, the other patrons in the cafe remained none the wiser; they never realized that the blocked messages had been sent.
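The behavior I observed is consistent with a simple word-level blacklist. Below is a minimal sketch of such a filter, assuming exact, case-insensitive matching of whole words; the actual software in the cafe and its term list are unknown, so `BLACKLIST`, `should_display`, and `post_to_board` are hypothetical names used only for illustration. It shows why the drop is silent and why “sh1t” sails through.

```python
import re

# Hypothetical blacklist; the cafe's real term list is unknown.
BLACKLIST = {"shit", "fuck"}

def should_display(message: str) -> bool:
    """Return False if any whole word in the message is on the blacklist."""
    # Lowercase the message and split it into runs of letters and digits,
    # then compare each token exactly against the blacklist.
    words = re.findall(r"[a-z0-9]+", message.lower())
    return not any(word in BLACKLIST for word in words)

def post_to_board(board: list[str], message: str) -> None:
    """Show the message only if it passes the filter.

    A rejected message is simply never appended: nothing is reported
    to the sender or to the other patrons, so the censor stays invisible.
    """
    if should_display(message):
        board.append(message)

board: list[str] = []
for sms in ["Hello from table 4", "this coffee is shit", "this coffee is sh1t"]:
    post_to_board(board, sms)

print(board)  # ['Hello from table 4', 'this coffee is sh1t']
```

Because the match is exact, any spelling the list's author didn't anticipate gets through, which is exactly the kind of gap a human moderator would not leave.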

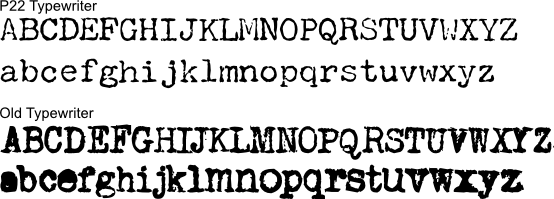

This desire to create barriers to profanity is widespread in communication technologies. For example, consider how many times you have been prompted by a spell-checker to review and “fix” a swear word. Offensive as they may be, “fuck” and “shit” are correctly spelled English words. It seems highly unlikely that they were left out of the spell-checker’s wordlist because its compiler forgot them. They were excluded, quite simply, because they were deemed obscene or inappropriate. Although the omission is intentional, it results in all cursing being falsely identified as misspelling: errors we’ve grown so accustomed to that they hardly seem like errors at all!

Now, unlike a book or website that impressionable children might read, nobody can be expected to stumble on a four-letter word while reading their spell-checker’s wordlist. These words are left out simply because spell-checker makers think we shouldn’t use them. The result is that every user who writes a four-letter word must add that word, by hand, to their “personal” dictionary; they must take explicit credit for using the term. The hope, perhaps, is that we’ll be reminded to use a different, more acceptable word. Every time this happens, the paternalism of the wordlist compiler is revealed.
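To make the mechanism concrete, here is a minimal sketch of a wordlist-based checker, assuming the simplest possible design: a word is flagged if it appears in neither the system wordlist nor the user’s personal dictionary. `SYSTEM_WORDLIST` and `flag_misspellings` are hypothetical; real spell-checkers also handle affixes, punctuation, and suggestions.

```python
# Hypothetical system wordlist: correctly spelled words, minus the ones the
# compiler chose to leave out ("shit" is deliberately absent here).
SYSTEM_WORDLIST = {"this", "coffee", "is", "hot"}

def flag_misspellings(text: str, personal_dictionary: set[str]) -> list[str]:
    """Return words found in neither the system wordlist nor the personal dictionary."""
    known = SYSTEM_WORDLIST | personal_dictionary
    return [word for word in text.lower().split() if word not in known]

personal: set[str] = set()
before = flag_misspellings("this coffee is shit", personal)
print(before)  # ['shit']: a correctly spelled word reported as a misspelling

personal.add("shit")  # the user has to add the word by hand
after = flag_misspellings("this coffee is shit", personal)
print(after)  # []
```

The omission is the whole design: nothing about the word’s spelling is wrong, yet the only way to silence the error is for the user to explicitly vouch for the word.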

Connecting back to my recent post on predictive text, here’s a very funny video of Armstrong and Miller lampooning the omission of four-letter words from predictive text databases, an omission that makes it more difficult to input profanity on mobile phones (e.g., are you sure you didn’t mean “shiv” and “ducking”?). You can also download the video in Ogg Theora if you have trouble watching it in Flash.

There’s a great line in there: “Our job … is to offer people not the words that they do use but the words that they should use.”
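The “shiv” and “ducking” substitutions aren’t random. On a standard phone keypad (the ITU-T E.161 letter layout), each key carries several letters, so T9-style input sees only a sequence of key presses: “shit” and “shiv” are both 7448, and “fucking” and “ducking” are both 3825464. If the profane word is missing from the database, the phone offers the only match it has. Below is a minimal sketch, assuming a tiny hypothetical dictionary (`DICTIONARY`) and ignoring the frequency ranking real predictive text systems use.

```python
# Standard phone keypad letter layout (ITU-T E.161).
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_KEY = {letter: key for key, letters in KEYPAD.items() for letter in letters}

def key_sequence(word: str) -> str:
    """Return the digits a user presses to type the word."""
    return "".join(LETTER_TO_KEY[c] for c in word.lower())

def suggestions(keys: str, dictionary: list[str]) -> list[str]:
    """Return every dictionary word that matches the key sequence."""
    return [word for word in dictionary if key_sequence(word) == keys]

# Hypothetical database that, like the ones lampooned, omits profanity.
DICTIONARY = ["shiv", "ducking", "hello", "good"]

print(key_sequence("shit"), key_sequence("shiv"))  # 7448 7448
print(suggestions(key_sequence("shit"), DICTIONARY))  # ['shiv']
print(suggestions(key_sequence("fucking"), DICTIONARY))  # ['ducking']
```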

Most of the errors described on this blog reveal the design of technical systems. While the errors in this case do not stem from technical decisions, they reveal a set of equally human choices. Perhaps more interestingly, the errors themselves are fully intended! The goal of swear-word omission is, in part, the moment of reflection that a revealing error introduces. In that moment, the censors hope, we might reflect on the “problems” in our coarse choice of language and consider communicating differently.

These technologies don’t keep us from swearing, any more than other technology designers can control our actions; we usually have the option of using or designing different technologies. But every technology offers affordances that make certain things easier and others more difficult. This may or may not be intended, but it’s always important. Through errors like those made by our prudish spell-checkers and predictive text input systems, some of these affordances, and their sources, are revealed.