Medireview is a reference to what has become a classic revealing error. The error was noticed in 2001 and 2002 when people started seeing a series of implausibly misspelled words on a wide variety of websites. In particular, website authors were frequently swapping the nonsense word medireview for medieval. Eventually, the errors were traced back to Yahoo: each webpage containing medireview had been sent as an attachment over Yahoo’s free email system.

The explanation of this error shares a lot in common with previous discussions of the the difficulty of searching for AppleScript on Apple’s website and my recent description of the term clbuttic. Medireview was caused, yet again, by an overzealous filter. Like the AppleScript error, the filter was attempt to defeat cross site scripting. Nefarious users were sending HTML attachments that, when clicked, might run scripts and cause bad things to happen — for example, they might gain access to passwords or data without a user’s permission or knowledge. To protect its users, Yahoo scanned through all HTML attachments and simply removed any references to “problematic” terms frequently used in cross-site scripting. Yahoo made the follow changes to HTML attachments — each line shows a term that can be used to invoke a script and the “safe” synonym it was replaced with:

- javascript → java-script

- jscript → j-script

- vbscript → vb-script

- livescript → live-script

- eval → review

- mocha → espresso

- expression → statement

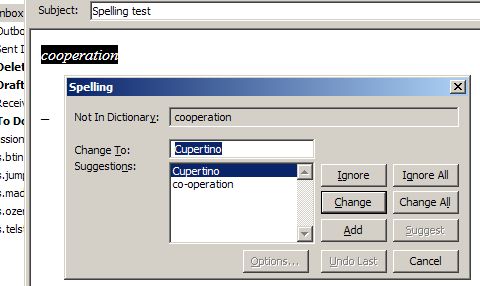

This caused problems because, like in the Clbuttic error, Yahoo didn’t check for word boundaries. This mean that any word containing eval (for example) would be changed to review. The term evaluate was rendered reviewuate. The term medieval was rendered medireview.

Of course, neither sender nor receiver knew that their attachments had been changed! Many people emailed webpages or HTML fragments which, complete with errors introduced by Yahoo, were then put online. The Indian newspaper The Hindu published an article referring to “medireview Mughal emperors of India.” Hundreds of others made similar mistakes.

The flawed script was in effect on Yahoo’s email system from at least March 2001 through July 2002 before the error was reported by the BBC, New Scientist and others.

Like a growing number of errors that I’ve covered here, this error pointed out the presence and power of an often hidden intermediary. The person who controls the technology one uses to write, send, and read email has power over one’s messages. This error forced some users of Yahoo’s system to consider this power and to make a choice about their continued use of the system. Quite a few stopped using Yahoo after this news hit the press. Others turned to other technologies, like public-key cryptography, to help themselves and others verify that their future messages’ integrity could be protected from accidental or intentional corruption.