Many of the gems from the newspaper correction blog Regret the Error qualify as revealing errors. One particularly entertaining example was this syndicated Reuters wire story on a beef recall, whose opening paragraph explained that (emphasis mine):

Quaker Maid Meats Inc. on Tuesday said it would voluntarily recall 94,400 pounds of frozen ground beef *panties* that may be contaminated with E. coli.

Of course the article was talking about beef patties, not beef panties.

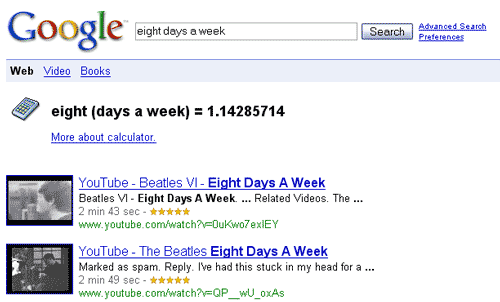

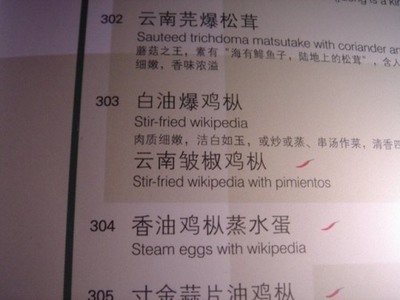

This error can be blamed, at least in part, on a spellchecker. I talked about spellcheckers before when I discussed the Cupertino effect, which happens when someone spells a word correctly but is prompted to change it to an incorrect word because the spellchecker does not contain the correct word in its dictionary. The Cupertino effect explains why the New Zealand Herald ran a story with Saddam Hussein’s name rendered as Saddam Hussies and Reuters ran a story referring to Pakistan’s Muttahida Quami Movement as the Muttonhead Quail Movement.
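To make the mechanism concrete, here is a minimal Python sketch of how a dictionary-based checker might flag a correctly spelled word it does not know and offer a wrong word in its place. The word list and the `check_word` helper are hypothetical, and the standard library's `difflib` stands in for whatever matching logic a real spellchecker uses.

```python
import difflib

# Hypothetical toy dictionary that happens to lack "cooperation".
KNOWN_WORDS = ["cupertino", "corporation", "operation", "movement"]

def check_word(word, known_words=KNOWN_WORDS):
    """Return None if the word is known, else the closest known word."""
    lowered = word.lower()
    if lowered in known_words:
        return None  # Known word: no red squiggle.
    # cutoff=0.0 forces a suggestion even when nothing is a good match.
    return difflib.get_close_matches(lowered, known_words, n=1, cutoff=0.0)[0]
```

Calling `check_word("cooperation")` returns some other word from the list, because the checker can only ever suggest words it already knows. A writer who accepts the suggestion without reading it swaps a correct word for an incorrect one.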

What’s going on in the beef panties example seems to be a little different and more subtle. Both “patties” and “panties” are correctly spelled words that are one letter apart. The typo that changes patties to panties is, unlike swapping Cupertino in for cooperation, an easy one for a human to make. Single-letter typos in the middle of a word are easy to make and easy to overlook.
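A short Python sketch shows why a spellchecker stays silent here. It assumes a checker that does nothing more than look each word up in a word list. The tiny `DICTIONARY` and both helper functions are hypothetical illustrations, not any real product's behavior.

```python
import re

# Hypothetical toy word list; a real spellchecker ships a far larger one.
DICTIONARY = {"frozen", "ground", "beef", "patties", "panties", "recall"}

def flag_unknown_words(text, dictionary=DICTIONARY):
    """Return the words in text that are absent from the dictionary."""
    words = re.findall(r"[a-z]+", text.lower())
    return [w for w in words if w not in dictionary]

def differ_by_one_letter(a, b):
    """True when a and b have equal length and differ in exactly one position."""
    return len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1
```

With this word list, `flag_unknown_words("frozen ground beef panties")` returns an empty list, while a non-word such as "pattties" would be flagged. `differ_by_one_letter("patties", "panties")` is true, so the slip is a single keystroke that a dictionary lookup alone cannot detect.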

As nearly all word processing programs have come to include spellcheckers, writers have become accustomed to them. We look for the red squiggly lines underneath words indicating a typo and, if we don’t see them, we assume we’ve got things right. We do so because this is usually a correct assumption: misspellings, and the typos that produce them, are the most common type of error that writers make.

In a sense, though, the presence of spellcheckers has made one class of misspellings, those that result in correctly spelled but incorrect words, more likely than before. Because spellcheckers make most errors easier to catch, we spend less time proofreading and, in the process, make a smaller class of errors (in this case, swapped words) more likely than it used to be. The result is errors like “beef panties.”

Although we’re not always aware of them, the affordances of technology change the way we work. We proofread differently when we have a spellchecker to aid us. In a way, the presence of a successful error-catching technology makes certain types of errors more likely.

One could make an analogy with the arguments made against some security systems. There’s a strong argument in the security community that the creation of a bad security system can actually make people less safe. If one creates a new high-tech electronic passport validator, border agents might stop checking the pictures as closely or asking tough questions of the person in front of them. If the system is easy to game, it can end up making the border less safe.

Error-checking systems eliminate many errors. In doing so, they can create affordances that make others more likely! If the error-checking system is good enough, we might stop looking for errors as closely as we did before, and more errors of the type it does not catch will slip through.